How do you define maturity?

Maturity describes the degree of formalisation and standardisation of a dataset with respect to the FAIRness, completeness and accuracy of its data and metadata. Both data and metadata mature as they pass through the post-production steps performed by the repository. The higher the maturity, the easier the data are to reuse. How the maturity of a dataset is specified in detail depends on the respective repository, or may be defined by a community.

What is the difference between data maturity and data quality?

In our definition, maturity includes quality but also covers additional aspects such as FAIRness. Please see the previous question for the definition itself.

Is the Maturity Indicator concept restricted to data?

No! The metadata fields of the Maturity Indicator can hold the results of any checks that were performed. This applies to anything (e.g. data, software, …) for which a DOI can be allocated. In addition, the results of maturity checks on the metadata itself (e.g. the metadata of the data behind the DOI) can also be stored in the Maturity Indicator.

Are you checking the maturity of data?

No. The Maturity Indicator only provides space to store quality and FAIRness information. The actual quality/FAIRness checking has to be done by the repository, the producer or another party, depending on how the repository organizes this process. The choice of quality/FAIRness metric is likewise a task of the repository/producer. The Maturity Indicator does not prescribe any metric.

What indicators do you use or how do you check for the FAIRness of data?

The concept of the Maturity Indicator is generic in the sense that it allows any quality or FAIRness indicator to be used. The Maturity Indicator just provides metadata properties/fields to store results of quality/FAIRness assessments.

Can the information of several maturity checks be added?

Yes!
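
As a rough illustration of what storing several checks could look like, the sketch below models one record per DOI that holds a list of assessment results. The class and field names (`MaturityCheck`, `metric_name`, `metric_version`, `assessed_on`, `result`, `assessed_by`) and the DOI are hypothetical placeholders; they are not taken from the DataCite Metadata Schema or from the actual Maturity Indicator proposal.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass
class MaturityCheck:
    """One assessment result. All field names are hypothetical."""
    metric_name: str     # which metric was applied (free choice of the repository)
    metric_version: str  # version of that metric
    assessed_on: date    # date the metric was applied
    result: str          # outcome, in whatever form the metric produces
    assessed_by: str     # e.g. "producer" or "repository"


@dataclass
class MaturityIndicator:
    """Container attached to one DOI; holds any number of checks."""
    doi: str
    checks: List[MaturityCheck] = field(default_factory=list)

    def add_check(self, check: MaturityCheck) -> None:
        self.checks.append(check)


# Placeholder DOI and made-up results, for illustration only.
indicator = MaturityIndicator(doi="10.1234/example")
indicator.add_check(
    MaturityCheck("quality-control", "1.0", date(2021, 3, 1), "passed", "producer")
)
indicator.add_check(
    MaturityCheck("fairness-assessment", "0.4", date(2021, 6, 15), "17/20", "repository")
)
print(len(indicator.checks))
```

The container does not interpret `result` in any way, which mirrors the point that the Maturity Indicator stores results without prescribing a metric.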

The FAIRness degree of a given dataset may change over time. How will you manage the dynamic nature of the FAIR data maturity metadata?

We also see the issue that metrics and standards evolve over time, so the degree of standardization/FAIRness of a dataset might implicitly decrease. We have two answers to this issue. Firstly, the Maturity Indicator provides fields to store the date on which a metric was applied and the version of that metric. Even if the version is old, this is visible in the metadata, so we can see directly how up to date the metric and its result are. Secondly, the repository is allowed to update the metadata. Hence, the repository could run a FAIRness check e.g. every 2 years and update the DataCite metadata. Thus, it is the responsibility of the repository or data producer to keep this information up to date.
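
A repository could use the stored date and metric version to decide when a re-assessment is due. The following is a minimal sketch under stated assumptions: the `CheckResult` class, its field names and the `is_stale` helper are hypothetical, and the two-year threshold simply reuses the example interval mentioned above (approximated as 730 days).

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class CheckResult:
    """Hypothetical stand-in for one stored assessment."""
    metric_name: str
    metric_version: str
    assessed_on: date


def is_stale(check: CheckResult, today: date, latest_version: str,
             max_age_days: int = 730) -> bool:
    """Return True if the check is older than max_age_days
    or was made with a metric version that is no longer the latest."""
    too_old = (today - check.assessed_on).days > max_age_days
    outdated_metric = check.metric_version != latest_version
    return too_old or outdated_metric


check = CheckResult("fairness-assessment", "1.0", date(2020, 1, 15))

# Three years after the assessment: older than the 730-day threshold.
stale_by_age = is_stale(check, today=date(2023, 1, 15), latest_version="1.0")

# One year after the assessment, same metric version: still current.
still_current = is_stale(check, today=date(2021, 1, 15), latest_version="1.0")

# One year after the assessment, but the metric has a newer version.
stale_by_version = is_stale(check, today=date(2021, 1, 15), latest_version="1.1")

print(stale_by_age, still_current, stale_by_version)
```

Whether a newer metric version alone should trigger a re-assessment is a policy decision for the repository; the sketch treats it as one possible rule.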

Do you expect the data maturity report to always be generated by the organization registering the DOI for the dataset?

It depends on the metric that is applied to the dataset and on the guidelines of the repository. A data producer might write a quality control report for the published data and submit it to the repository together with the dataset. In future, an external (anonymous) reviewer might write a review report on a dataset. In the near future, automatic FAIRness assessment tools might generate machine-readable FAIRness reports for a dataset that you provide to them. These documents (quality control report, review report and FAIRness report) could be linked via the Maturity Indicator; they would not be generated by the repository. However, the repository could also generate such a report itself, as in the first use case in the webinar talk on the Quality Maturity Matrix.

Are you only planning to work with DataCite?

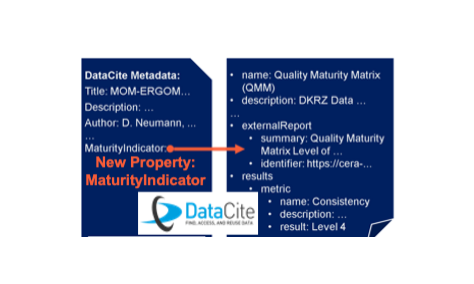

The concept of the Maturity Indicator is designed as an extension of the DataCite Metadata Schema. We plan to submit it to the DataCite Metadata Working Group within the next month. However, the concept can be adapted to any metadata schema if the provider of the specific metadata schema wants to do that.

Is there a chance to collaborate with others?

Yes, definitely! Please send an e-mail to aW5mb0BhdG1vZGF0LmRl.

What about a controlled vocabulary to qualify the kind of maturity checks, e.g. self-assessment vs. peer review etc.?

Controlled vocabularies (CVs) are quite important in this context and we are working on adding CVs whenever it is possible and appropriate.